Introduction to planar image tracking

Planar image tracking detects and tracks textured planar objects found in everyday life. Planar objects can be small items such as a book, a business card, or a poster, or large targets such as a graffiti wall. Such objects or scenes have flat surfaces with rich, non-repeating textures.

This article outlines the basic principles, expected effects, and platform adaptation solutions for planar image detection and tracking, to help you quickly understand what the feature can and cannot do and the key points of development.

Basic principles

Understanding these principles helps developers optimize recognition performance and avoid common issues.

Core process

Loading and preprocessing stage:

- The system loads the target image, extracts a large number of visual feature points from it, generates a feature description of the image, and inserts it into the feature library.

- Images with richer textures are easier to identify and track. You can use the target image detection tool to check the recognizability of your target image in advance.

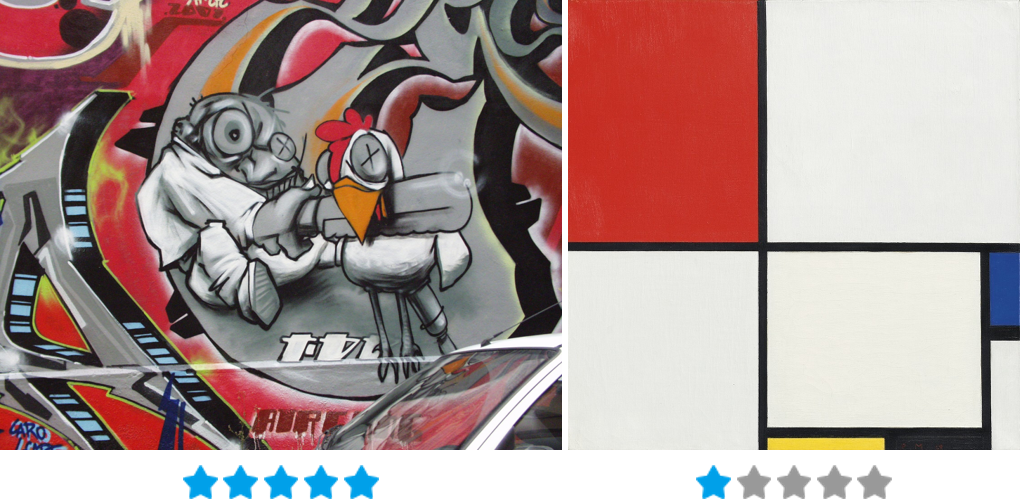

Reference image on the left: rich in texture and easy to recognize (5 stars); reference image on the right: simple elements, lack of texture, difficult to recognize (1 star).

We recommend using target images rated 4 to 5 stars.

Real-time detection and tracking stage:

- After the camera captures the frame, the system analyzes the feature points of the current frame and performs feature matching with the feature library of the target image.

- The PnP (Perspective-n-Point) algorithm is used to calculate the pose (position + rotation) of the image in 3D space.

- Once the target is detected, the system switches to tracking mode. In this mode, it compares consecutive frames and analyzes the motion between them to track the target in real time.
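The matching step can be illustrated with a minimal sketch. It assumes binary descriptors stored as integers, compared by Hamming distance, and filtered with a nearest-neighbor ratio test (Lowe's ratio test), a common technique in feature-based tracking. The SDK's actual descriptors and matcher are not documented here, and the function names `hamming` and `match_descriptors` are hypothetical.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(
    frame_desc: List[int],
    library_desc: List[int],
    ratio: float = 0.8,
) -> List[Tuple[int, int]]:
    """Return (frame_index, library_index) pairs that pass the ratio test.

    Hypothetical sketch: brute-force matching of each frame descriptor
    against every descriptor in the target's feature library.
    """
    matches: List[Tuple[int, int]] = []
    if len(library_desc) < 2:
        # The ratio test needs a best and a second-best candidate.
        return matches
    for i, d in enumerate(frame_desc):
        # Distances from this frame descriptor to every library descriptor.
        dists = sorted((hamming(d, ld), j) for j, ld in enumerate(library_desc))
        (best, best_j), (second, _) = dists[0], dists[1]
        # Keep the match only if the best candidate is clearly better
        # than the runner-up; ambiguous matches are discarded.
        if best < ratio * second:
            matches.append((i, best_j))
    return matches


library = [0b11110000, 0b00001111, 0b10101010]
frame = [0b11110001, 0b01010101]
print(match_descriptors(frame, library))  # [(0, 0)]
```

In this example the second frame descriptor is equally distant from two library descriptors, so it is rejected as ambiguous. The same effect explains why repetitive textures, which produce many near-identical descriptors, are hard to match.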

Optimization mechanism:

- Tracking loss recovery: The system automatically redetects the target after brief occlusion or fast motion blur.

- Multi-target simultaneous tracking: The Simultaneous Number parameter controls the concurrency of a single Tracker, so one Tracker can track multiple targets at the same time.
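To make the effect of a concurrency cap concrete, here is a hypothetical model of how a limit like Simultaneous Number could bound the set of tracked targets. `CappedTracker` and its admission policy (keep targets still being tracked, then admit new ones while capacity remains) are assumptions for illustration only, not the SDK's Tracker API or its actual scheduling behavior.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class CappedTracker:
    """Hypothetical tracker that tracks at most `simultaneous_number` targets."""

    simultaneous_number: int
    tracked: Set[str] = field(default_factory=set)

    def update(self, detected: List[str]) -> Set[str]:
        """Update the tracked set from the target names detected in this frame."""
        # Drop targets that were not detected this frame (tracking lost).
        self.tracked &= set(detected)
        # Admit newly detected targets only while there is spare capacity.
        for name in detected:
            if len(self.tracked) >= self.simultaneous_number:
                break
            self.tracked.add(name)
        return set(self.tracked)


tracker = CappedTracker(simultaneous_number=2)
print(sorted(tracker.update(["book", "poster", "card"])))  # ['book', 'poster']
print(sorted(tracker.update(["card", "poster"])))          # ['card', 'poster']
```

With a cap of 2, the third target is ignored until one of the tracked targets is lost, which matches the "some target images cannot be tracked" symptom described later.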

Technical limitations

- Only flat images are supported; 3D objects and dynamic content are not.

- Relies on environmental lighting; overly dark or overexposed conditions may cause detection difficulties or tracking loss.

- During detection, keep the camera close enough to the target that the target image occupies at least 30% of the frame.

- Multi-target tracking is limited by device performance. Typically, PCs can track over 10 targets simultaneously, while mobile devices can track 4–6 flat targets.
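The 30% guideline can be checked with simple arithmetic. This sketch assumes you already have the four corners of the target as it appears in the camera frame, in pixel coordinates, ordered around the quadrilateral and lying inside the frame (for example, by projecting the target's corners with the estimated pose). The helper names are hypothetical; the area is computed with the shoelace formula.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(corners: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula (corners in order)."""
    n = len(corners)
    s = 0.0
    for i in range(n):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % n]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def frame_coverage(corners: List[Point], width: int, height: int) -> float:
    """Fraction of the frame area covered by the target quadrilateral."""
    return polygon_area(corners) / (width * height)


def close_enough(corners: List[Point], width: int, height: int,
                 min_fraction: float = 0.30) -> bool:
    """True if the target covers at least `min_fraction` of the frame."""
    return frame_coverage(corners, width, height) >= min_fraction


# A 480x360 target in a 1280x720 frame covers 18.75%: too far away.
small = [(400, 200), (880, 200), (880, 560), (400, 560)]
print(frame_coverage(small, 1280, 720), close_enough(small, 1280, 720))
```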

Effects and expected results

Beyond the working mechanism and technical limitations, you should also know what results this feature can achieve. Knowing them helps you set reasonable test criteria during development.

Ideal effect

- Accurate overlay: Virtual objects align with image edges.

- Fast detection: Low latency from app launch to the first successful detection.

- Stable tracking: Maintains tracking even when the image is rotated, moved, or partially occluded.

Non-ideal situations and countermeasures

| Phenomenon | Cause | User perception | Solution preview (see details in later sections) |
|---|---|---|---|
| Unable to recognize | Insufficient image texture, or target too small | Virtual object does not appear | Optimize the target image; use tools to check recognizability |
| Tracking jitter | Target occupies too small a portion of the frame; insufficient trackable points | Virtual object shakes noticeably | Avoid being too far from the target image; set tracking mode to PreferQuality |
| Frequent loss | Rapid movement or complete occlusion of the image | Virtual object flickers or disappears | Stabilize the device or target image, or increase target size |
| Missing multi-image targets | Limited by hardware performance | Some target images cannot be tracked | Balance performance by setting a reasonable Simultaneous Number parameter |

Expected result verification method

- Development phase: Preview with a PC camera in Play mode in the Unity Editor.

- Testing phase: Use the official Sample scene or self-built test images to cover different lighting/angle/distance conditions.

Best practices for target images

The effectiveness of planar image tracking depends heavily on the quality of the target image. To ensure reliable recognition, follow the guidelines below when preparing target images.

Depending on the usage scenario, you can prepare target images in different ways: photograph the target object head-on with a camera, or design the pattern first and then print it. Either a photo or a design draft can serve as the template image.

Basic requirements

- Image format: JPG or PNG is recommended.

- Transparency handling: If the image has an alpha (transparency) channel, the system places it on a white background. To avoid unexpected results, do not use images with transparency.
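To preview what a transparent image may look like after it is placed on white, you can flatten it yourself with standard alpha compositing ("over" a white background). This is a sketch under the assumption that the system uses ordinary alpha blending; the exact method is not documented here, and `flatten_on_white` and `flatten_image` are hypothetical helpers. Fully transparent regions become flat white, which contributes no texture.

```python
from typing import List, Tuple

RGBA = Tuple[int, int, int, int]
RGB = Tuple[int, int, int]


def flatten_on_white(pixel: RGBA) -> RGB:
    """Composite one 8-bit RGBA pixel over an opaque white background."""
    r, g, b, a = pixel
    alpha = a / 255.0
    # out = alpha * color + (1 - alpha) * white
    return tuple(round(c * alpha + 255 * (1.0 - alpha)) for c in (r, g, b))


def flatten_image(pixels: List[List[RGBA]]) -> List[List[RGB]]:
    """Flatten an image given as rows of RGBA pixels."""
    return [[flatten_on_white(p) for p in row] for row in pixels]


print(flatten_image([[(0, 0, 0, 0), (10, 20, 30, 255)]]))
# [[(255, 255, 255), (10, 20, 30)]]
```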

Core optimization points

Ensure rich texture details

The template image should have sufficient details and edge variations, avoiding solid colors or simple graphics.

Reference left: images with rich textures can be detected; reference right: solid-color images cannot be detected

Avoid repetitive patterns

Patterns with regular repetition (such as checkerboards or stripes) reduce the uniqueness of feature points.

Reference: repetitive pattern images cannot be tracked

Fill the frame with content

The subject should occupy the entire frame as much as possible, minimizing blank areas.

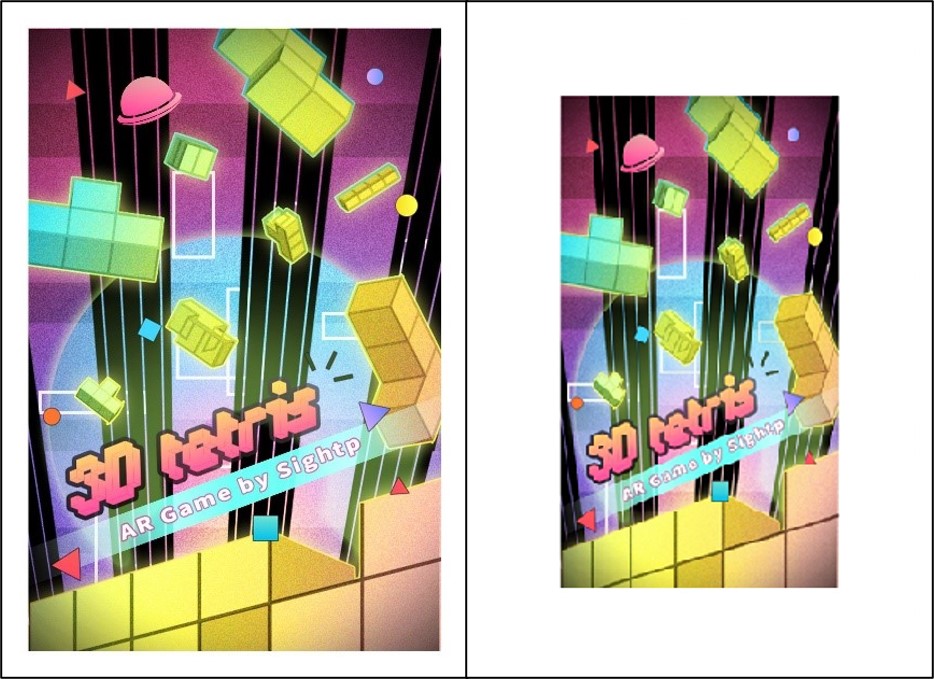

Reference: the left image with a full subject is easier to detect and track than the right image with excessive blank space
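A rough way to spot excessive blank space before using an image is to measure how much of it is content. The heuristic below is an assumption for illustration, not the metric used by the target image detection tool: it treats pixels brighter than a hypothetical `blank_threshold` as blank background, so it only applies to images with a light background, given as rows of 8-bit grayscale values.

```python
from typing import List


def content_fraction(gray: List[List[int]], blank_threshold: int = 245) -> float:
    """Fraction of pixels darker than `blank_threshold` (treated as content)."""
    total = sum(len(row) for row in gray)
    if total == 0:
        return 0.0
    content = sum(1 for row in gray for v in row if v < blank_threshold)
    return content / total


# A mostly white image: only 2 of 8 pixels carry content.
print(content_fraction([[255, 255, 255, 255], [255, 0, 100, 255]]))  # 0.25
```

A low value suggests cropping the blank margins so the subject fills the image.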

Control the aspect ratio

The image should not be overly elongated, with the shorter side being at least 20% of the longer side.

Reference: elongated images are difficult to track
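The aspect-ratio guideline translates directly into a check that the shorter side is at least 20% of the longer side. `aspect_ratio_ok` is a hypothetical helper name.

```python
def aspect_ratio_ok(width: int, height: int, min_ratio: float = 0.2) -> bool:
    """True if the shorter side is at least `min_ratio` of the longer side."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return min(width, height) / max(width, height) >= min_ratio


print(aspect_ratio_ok(1000, 200))  # True: exactly 20%
print(aspect_ratio_ok(1000, 150))  # False: too elongated
```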

Choose an appropriate resolution

Recommended range: between SQCIF (128×96) and Quad VGA (1280×960).

Too small: insufficient feature points, leading to reduced recognition rates.

Too large: unnecessary increase in memory overhead and computation time when generating Target data.
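A quick pre-check against the recommended range can be written as follows. The sketch assumes the range applies per side, comparing the longer side with 128 to 1280 and the shorter side with 96 to 960, so portrait images are handled the same as landscape ones. It also suggests a downscale factor for oversized images. Both function names are hypothetical.

```python
SQCIF = (128, 96)       # minimum (long side, short side)
QUAD_VGA = (1280, 960)  # maximum (long side, short side)


def resolution_ok(width: int, height: int) -> bool:
    """True if the image lies between SQCIF and Quad VGA in both dimensions."""
    long_side, short_side = max(width, height), min(width, height)
    return (SQCIF[0] <= long_side <= QUAD_VGA[0]
            and SQCIF[1] <= short_side <= QUAD_VGA[1])


def suggest_scale(width: int, height: int) -> float:
    """Downscale factor (at most 1.0) that fits the image within Quad VGA.

    Upscaling is never suggested, since it adds no real texture detail.
    """
    long_side, short_side = max(width, height), min(width, height)
    return min(1.0, QUAD_VGA[0] / long_side, QUAD_VGA[1] / short_side)


w, h = 4032, 3024  # a typical phone photo
s = suggest_scale(w, h)
print(resolution_ok(w, h), round(w * s), round(h * s))  # False 1280 960
```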