AR data flow

This article introduces the data flow in EasyAR Sense. EasyAR Sense uses component-based APIs, and components are connected through data flow.

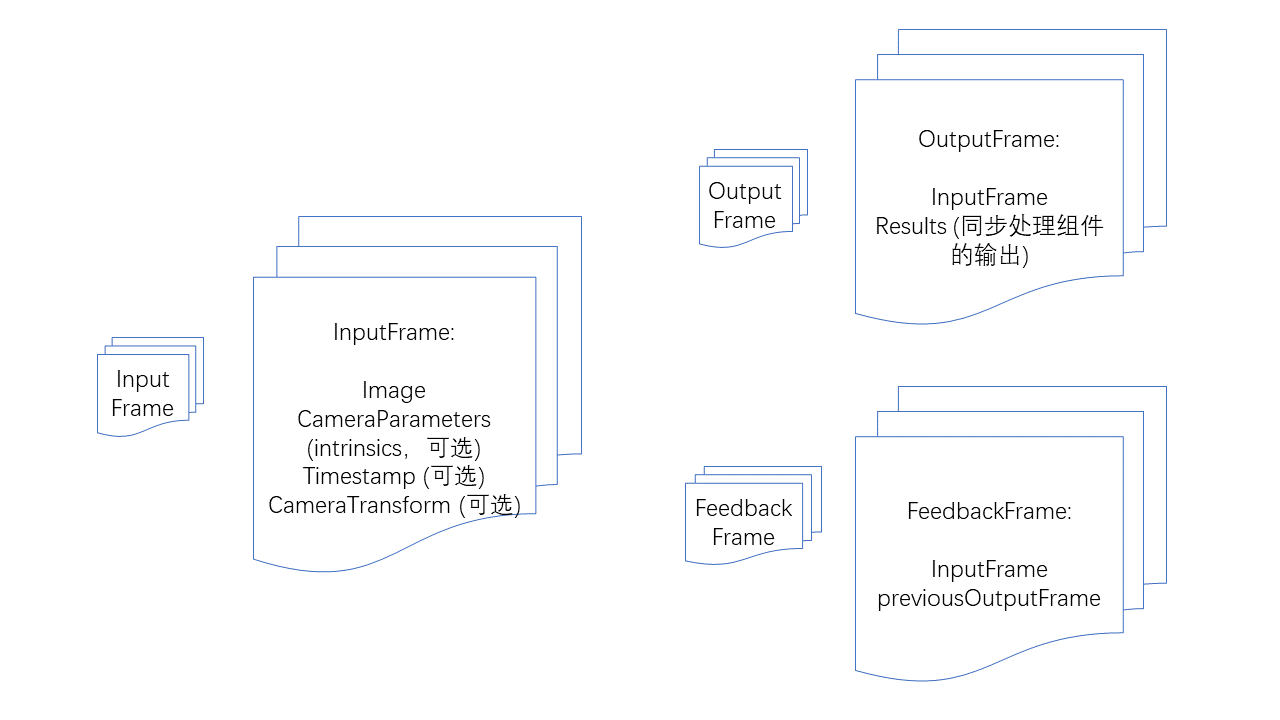

Input and output data

InputFrame: Input frame. Contains image, camera parameters, timestamp, camera transformation relative to the world coordinate system, and tracking status. Among these, camera parameters, timestamp, camera transformation relative to the world coordinate system, and tracking status are all optional, but specific algorithm components may have specific requirements for the input.

OutputFrame: Output frame. Contains the input frame and the output results of synchronous processing components.

FeedbackFrame: Feedback frame. Contains an input frame and a historical output frame, used for feedback-based synchronous processing components such as ImageTracker.
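The relationship between the three frame types can be sketched with a toy data model. This is an illustration in Python, not the EasyAR Sense API: the class fields, their types, and the choice to allow a missing historical output on the first frame are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class InputFrame:
    """Toy input frame: only the image is mandatory, as described above."""
    image: bytes
    camera_parameters: Optional[dict] = None
    timestamp: Optional[float] = None
    camera_transform: Optional[tuple] = None
    tracking_status: Optional[str] = None


@dataclass(frozen=True)
class OutputFrame:
    """Toy output frame: the input frame plus results of synchronous components."""
    input_frame: InputFrame
    results: Tuple[str, ...] = ()


@dataclass(frozen=True)
class FeedbackFrame:
    """Toy feedback frame: a new input frame paired with a historical output frame."""
    input_frame: InputFrame
    previous_output: Optional[OutputFrame] = None  # assumption: absent on the first frame


frame0 = InputFrame(image=b"\x00", timestamp=0.0)
out0 = OutputFrame(frame0, results=("target-A",))

frame1 = InputFrame(image=b"\x01", timestamp=0.033)
feedback1 = FeedbackFrame(frame1, previous_output=out0)
```

Here `feedback1` carries both the new image and the results computed for the earlier frame, which is what a feedback-based component needs in order to continue tracking.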

Camera component

CameraDevice: Default camera on Windows, Mac, iOS, and Android.

ARKitCameraDevice: Default ARKit implementation on iOS.

ARCoreCameraDevice: Default ARCore implementation on Android.

MotionTrackerCameraDevice: Implements motion tracking by fusing multiple sensors to calculate the 6DoF coordinates of the device. (Only supports Android)

ThreeDofCameraDevice: Adds 3DoF orientation on top of the default camera.

InertialCameraDevice: Adds 3DoF orientation and plane-based inertial-estimated translation on top of the default camera.

Custom camera device: Custom camera implementation.

Algorithm components

Feedback-based synchronous processing components: must output results with every camera frame, and take the previous frame's processing results as input to avoid mutual interference.

ImageTracker: Implements detection and tracking of planar images.

ObjectTracker: Implements detection and tracking of 3D objects.

Synchronous processing components: require results to be output with each frame of camera images.

SurfaceTracker: Implements tracking of environmental surfaces.

SparseSpatialMap: Implements sparse spatial mapping, providing the ability to scan physical spaces while generating point cloud maps and performing real-time localization.

MegaTracker: Implements Mega spatial localization.

Asynchronous processing components: do not require results to be output with each frame of camera images.

CloudRecognizer: Implements cloud recognition.

DenseSpatialMap: Implements dense spatial mapping, which can be used for effects like collision and occlusion.

Component availability check

All components have an isAvailable function that can be used to determine whether the component is available.

A component may be unavailable due to the following reasons:

Not implemented on the current operating system.

Required dependencies are missing, such as ARKit or ARCore.

The component does not exist in the current version (variant), such as certain features being absent in some lightweight versions.

The component is unavailable under the current license.

Always check if the component is available before using it and provide appropriate fallback or prompts.
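The check-then-fall-back pattern can be sketched as follows. This is a minimal Python model: the `Component` class and the `pick_first_available` helper are hypothetical stand-ins, and the availability values are made up for the example rather than queried from a real device.

```python
class Component:
    """Hypothetical stand-in for a component that exposes an availability check."""

    def __init__(self, name, available):
        self.name = name
        self._available = available

    def is_available(self):
        return self._available


def pick_first_available(candidates):
    """Return the first available candidate in preference order, or None."""
    for candidate in candidates:
        if candidate.is_available():
            return candidate
    return None


# Preference order: richest tracking first, plain camera last.
candidates = [
    Component("ARCoreCameraDevice", available=False),
    Component("MotionTrackerCameraDevice", available=False),
    Component("CameraDevice", available=True),
]

chosen = pick_first_available(candidates)
if chosen is None:
    message = "AR is not supported on this device"  # prompt shown to the user
else:
    message = "using " + chosen.name
```

Ordering the candidates from most to least capable keeps the fallback logic in one place, and the `None` branch is where a user-facing prompt would go.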

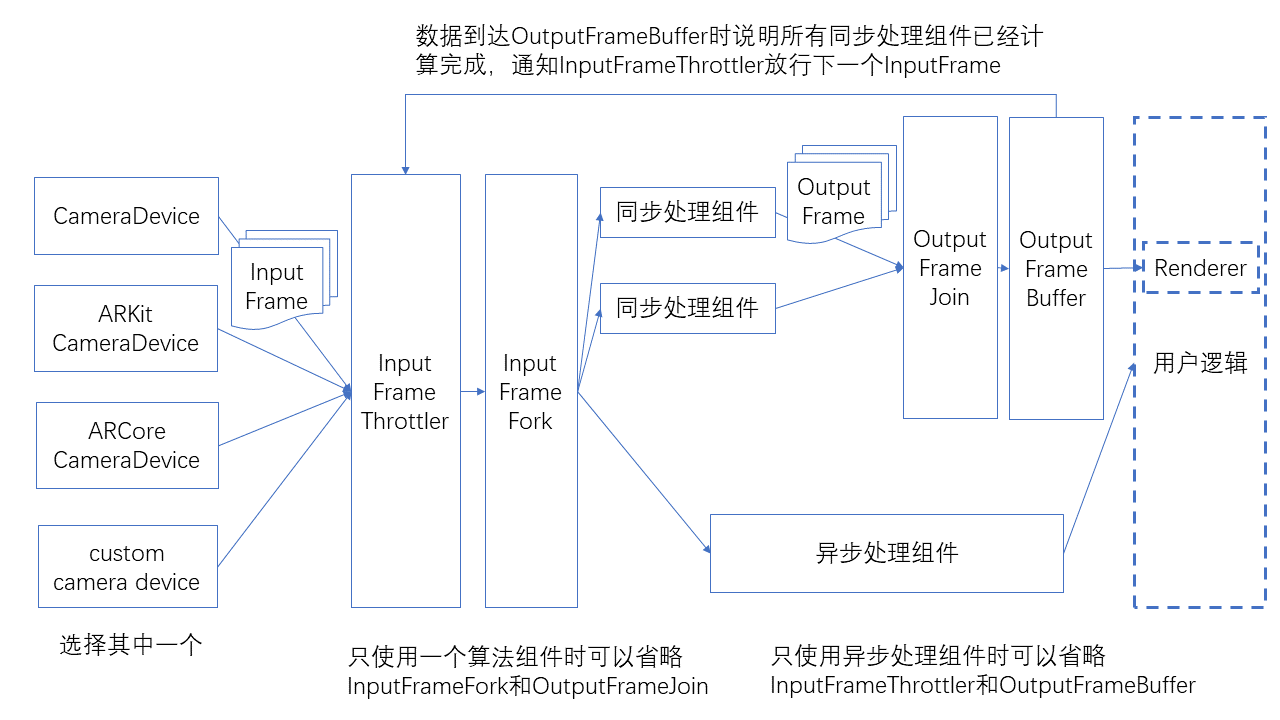

Data flow

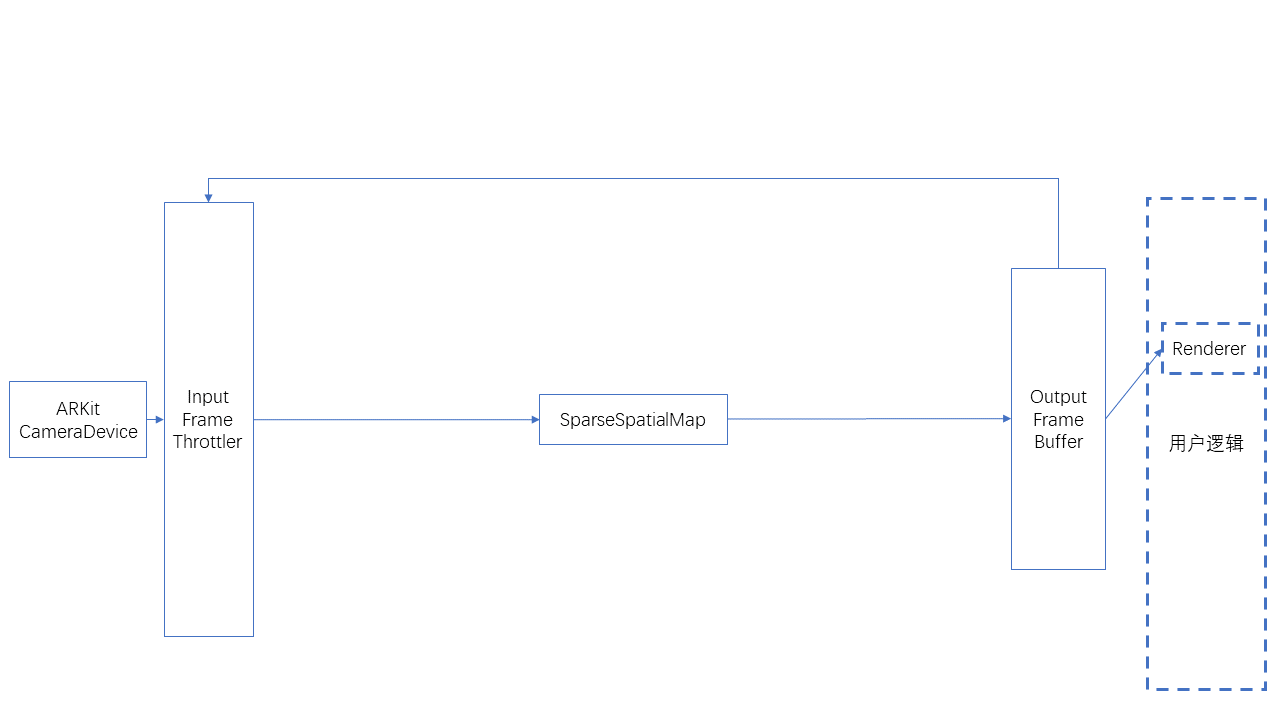

The components are connected as shown in the following figure.

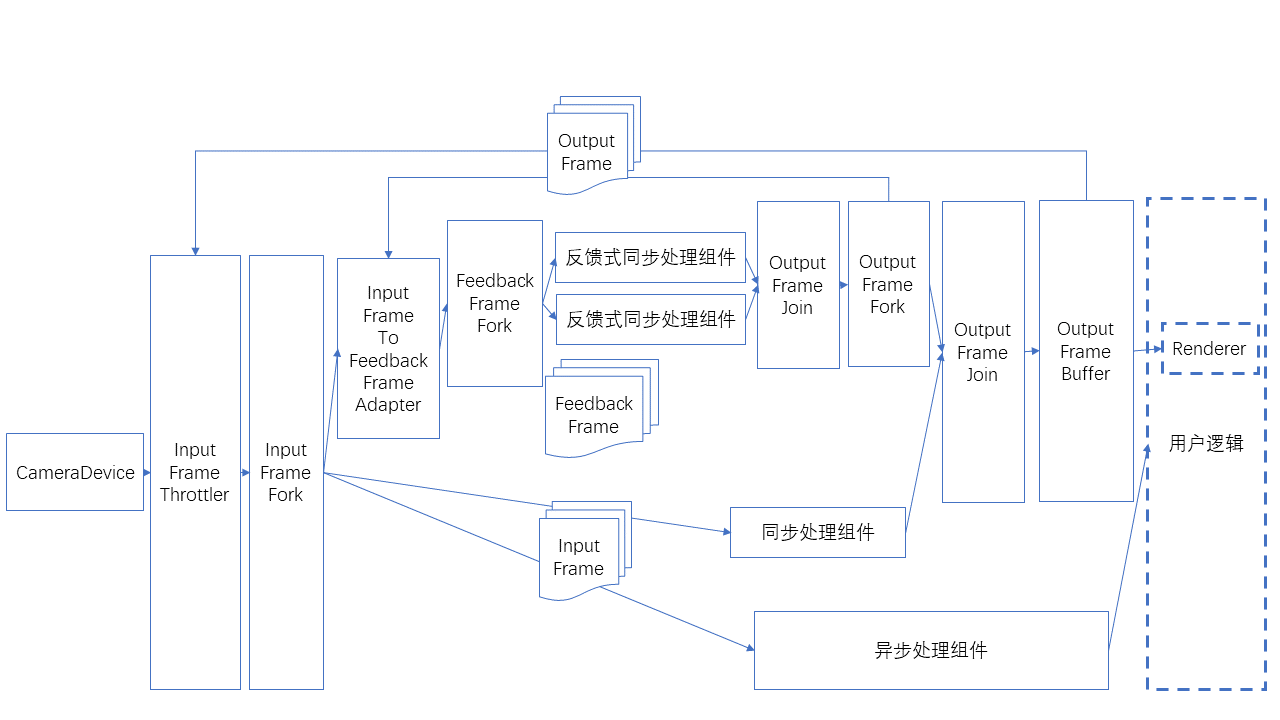

Input can also be supplied in a special form, as a feedback frame, as shown in the following figure.

Data flow helper classes

Emission and reception ports of the data flow. Each component should include these ports.

SignalSink / SignalSource: Receive/emit a signal (no data).

InputFrameSink / InputFrameSource: Receive/emit an InputFrame.

OutputFrameSink / OutputFrameSource: Receive/emit an OutputFrame.

FeedbackFrameSink / FeedbackFrameSource: Receive/emit a FeedbackFrame.
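The sink/source pairing can be modelled in a few lines. The sketch below is a Python toy, not the EasyAR Sense classes: the `Sink` and `Source` names, their methods, and the assumption that a source feeds at most one sink at a time are all illustrative.

```python
class Sink:
    """Toy reception port: hands every received item to a handler."""

    def __init__(self, handler):
        self._handler = handler

    def handle(self, item):
        self._handler(item)


class Source:
    """Toy emission port: forwards items to the sink it is connected to."""

    def __init__(self):
        self._sink = None

    def connect(self, sink):
        # Assumption for this sketch: one sink per source, a new connection replaces the old one.
        self._sink = sink

    def disconnect(self):
        self._sink = None

    def emit(self, item):
        if self._sink is not None:
            self._sink.handle(item)


received = []
camera_output = Source()
tracker_input = Sink(received.append)

camera_output.connect(tracker_input)
camera_output.emit("frame-1")      # delivered to the sink

camera_output.disconnect()
camera_output.emit("frame-2")      # nothing is connected, so the frame is dropped
```

Under the one-sink assumption, sending the same frame to several components requires an explicit splitting node, which is the role of the fork classes.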

Branching and merging of data flow

InputFrameFork: Split an InputFrame into multiple parallel emissions.

OutputFrameFork: Split an OutputFrame into multiple parallel emissions.

OutputFrameJoin: Merge multiple OutputFrame into one and combine all results into Results. Do not connect its inputs while data is flowing; otherwise it may enter a state in which it can no longer output. (It is recommended to complete the data flow connection before starting the Camera.)

FeedbackFrameFork: Split a FeedbackFrame into multiple parallel emissions.
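A toy fork and join make the merging behaviour, and the way a join can get stuck, easier to see. This Python sketch is a simplified model under stated assumptions: the `fork` function, the `Join` class, and the rule "emit once every input has delivered" are illustrative and do not reproduce the real matching logic.

```python
def fork(item, outputs):
    """Toy fork: deliver the same item to every output branch."""
    for output in outputs:
        output(item)


class Join:
    """Toy join: waits for one delivery on every input, then emits the merged results."""

    def __init__(self, input_count, on_output):
        self._slots = [None] * input_count
        self._on_output = on_output

    def receive(self, index, results):
        self._slots[index] = list(results)
        if all(slot is not None for slot in self._slots):
            merged = [result for slot in self._slots for result in slot]
            self._slots = [None] * len(self._slots)
            self._on_output(merged)


merged_frames = []
join = Join(2, merged_frames.append)

# Two parallel branches process the same frame and feed the join.
fork("frame-0", [
    lambda frame: join.receive(0, ["surface@" + frame]),
    lambda frame: join.receive(1, ["image-target@" + frame]),
])

# Only one branch delivers for the next frame: the join holds it and emits nothing.
join.receive(0, ["surface@frame-1"])
```

The last call shows why a join input that is connected late or never fed can block output: the node keeps waiting for the missing branch.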

Throttling and buffering of data flow

InputFrameThrottler: Receive and emit InputFrame, but only emit one at a time. The next InputFrame will only be emitted after receiving a trigger signal. When multiple InputFrame are received, subsequent InputFrame may overwrite the previous ones.

OutputFrameBuffer: Receive and buffer OutputFrame, waiting for user polling. A signal can be emitted when OutputFrame is received.

Connect the signal emitted by OutputFrameBuffer to InputFrameThrottler to complete the entire throttling process.
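The throttling loop can be simulated with a toy model. The Python sketch below is not the EasyAR Sense implementation: the `Throttler` and `OutputBuffer` classes, the `in_flight` list that stands in for slow downstream processing, and the decision to let the very first frame through without a signal are assumptions made for the example.

```python
class Throttler:
    """Toy throttler: forwards one frame, then waits for a signal."""

    def __init__(self, emit):
        self._emit = emit
        self._pending = None   # newest frame waiting for a signal
        self._ready = True     # True when the next frame may pass immediately

    def receive(self, frame):
        if self._ready:
            self._ready = False
            self._emit(frame)
        else:
            self._pending = frame  # a newer frame overwrites the waiting one

    def signal(self):
        if self._pending is None:
            self._ready = True
            return
        frame, self._pending = self._pending, None
        self._emit(frame)


class OutputBuffer:
    """Toy buffer: keeps the latest frame for polling and signals on arrival."""

    def __init__(self, on_signal):
        self._latest = None
        self._on_signal = on_signal

    def receive(self, frame):
        self._latest = frame
        self._on_signal()

    def peek(self):
        return self._latest


emitted = []     # every frame the throttler let through
in_flight = []   # frames still being processed downstream


def emit(frame):
    emitted.append(frame)
    in_flight.append(frame)


throttler = Throttler(emit)
buffer = OutputBuffer(on_signal=throttler.signal)


def finish_processing():
    """Simulate downstream processing completing for the oldest in-flight frame."""
    buffer.receive(in_flight.pop(0))


throttler.receive("f1")   # passes straight through
throttler.receive("f2")   # held: f1 is still in flight
throttler.receive("f3")   # overwrites f2
finish_processing()       # f1 reaches the buffer, the signal releases f3
finish_processing()       # f3 reaches the buffer
```

In this run `f2` is never processed: it was replaced by `f3` while the pipeline was busy, which is the overwrite behaviour described for InputFrameThrottler.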

Conversion of data flow

InputFrameToOutputFrameAdapter: Can directly wrap an InputFrame into an OutputFrame for rendering and display.

InputFrameToFeedbackFrameAdapter: Can wrap an InputFrame and a historical OutputFrame into a FeedbackFrame for feedback-based synchronous processing components.
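The feedback adapter can be pictured as a node that remembers the most recent historical output and attaches it to each new input frame, following the FeedbackFrame definition earlier in this article. The Python sketch below is a toy model: the `FeedbackAdapter` class, its method names, and the use of a plain tuple for the wrapped frame are assumptions for illustration.

```python
class FeedbackAdapter:
    """Toy adapter: pairs each new input frame with the latest historical output."""

    def __init__(self, emit):
        self._emit = emit
        self._history = None  # most recent output frame fed back in

    def side_input(self, output_frame):
        """Receive a historical output frame from further down the data flow."""
        self._history = output_frame

    def input(self, input_frame):
        """Wrap the new input frame with whatever history is currently stored."""
        self._emit((input_frame, self._history))


feedback_frames = []
adapter = FeedbackAdapter(emit=feedback_frames.append)

adapter.input("frame-0")          # no history yet on the first frame
adapter.side_input("output-0")    # the result for frame-0 is fed back
adapter.input("frame-1")          # wrapped together with output-0
```

The loop from the component's output back to the adapter's side input is what makes the data flow graph cyclic.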

Limitation on the number of InputFrame

CameraDevice can set bufferCapacity, which is the maximum number of InputFrame to emit. The current default value is 8.

Custom cameras can be implemented using BufferPool.

For the number of InputFrame required by each component, refer to the API documentation of each component.

If the number of InputFrame is insufficient, the data flow may stall, which in turn stalls rendering.

An insufficient number of InputFrame does not always show up at first startup: rendering may run normally at first and then stall only after the app is switched to the background or components are paused and restarted. Cover these cases during testing.
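A small simulation shows how a buffer limit turns into a stall. This Python sketch is a deliberately simplified model, not a description of the real buffer pool: the `frames_emitted` function and the rule that downstream components release their oldest frame only once they hold more than a fixed number are assumptions.

```python
def frames_emitted(capacity, frames_held_downstream, attempts):
    """Count frames a toy camera emits when downstream keeps some frames alive.

    capacity: maximum number of frames that may exist at once.
    frames_held_downstream: frames the pipeline holds without releasing.
    attempts: number of times the camera tries to emit a frame.
    """
    in_use = 0
    emitted = 0
    for _ in range(attempts):
        if in_use >= capacity:
            continue  # no free buffer: the camera cannot emit and the flow stalls
        in_use += 1
        emitted += 1
        if in_use > frames_held_downstream:
            in_use -= 1  # downstream drops its oldest frame and returns a buffer
    return emitted


stalled = frames_emitted(capacity=2, frames_held_downstream=2, attempts=10)
flowing = frames_emitted(capacity=3, frames_held_downstream=2, attempts=10)
```

In this model the flow keeps running only when the capacity exceeds the number of frames held downstream by at least one, which is why the per-component requirements have to be added up when sizing the buffer.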

Connect and disconnect

Connecting and disconnecting while the data flow is running is not recommended.

If connecting or disconnecting during operation is unavoidable, do it only on a cut edge (an edge whose removal splits the data flow into two parts). Do not do it on an edge of a cycle (a cycle formed by edges when the data flow is viewed as an undirected graph), on an input of OutputFrameJoin, or on the sideInput of InputFrameThrottler. Otherwise, the data flow may get stuck in nodes such as OutputFrameJoin and InputFrameThrottler and be unable to output.
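Cut edges of a data flow can be found mechanically by treating the connections as an undirected graph and running a standard bridge-finding depth-first search. The Python sketch below does that; the `find_cut_edges` function and the node names are hypothetical, and the example topology (a single feedback-based tracker with throttling) is an assumed layout used only for illustration.

```python
from collections import defaultdict


def find_cut_edges(edges):
    """Return the edges whose removal disconnects the undirected graph (bridges)."""
    adjacency = defaultdict(list)
    for edge_id, (a, b) in enumerate(edges):
        adjacency[a].append((b, edge_id))
        adjacency[b].append((a, edge_id))

    discovery = {}   # order in which each node was first visited
    low = {}         # earliest node reachable without reusing the parent edge
    bridges = []

    def visit(node, parent_edge):
        discovery[node] = low[node] = len(discovery)
        for neighbor, edge_id in adjacency[node]:
            if edge_id == parent_edge:
                continue
            if neighbor in discovery:
                low[node] = min(low[node], discovery[neighbor])
            else:
                visit(neighbor, edge_id)
                low[node] = min(low[node], low[neighbor])
                if low[neighbor] > discovery[node]:
                    bridges.append(edges[edge_id])

    for node in list(adjacency):
        if node not in discovery:
            visit(node, -1)
    return bridges


# Assumed example topology; every name here is illustrative.
flow = [
    ("camera", "throttler"),
    ("throttler", "input_fork"),
    ("input_fork", "i2o_adapter"),
    ("i2o_adapter", "join"),
    ("input_fork", "i2f_adapter"),
    ("i2f_adapter", "tracker"),
    ("tracker", "join"),
    ("join", "output_fork"),
    ("output_fork", "buffer"),
    ("output_fork", "i2f_adapter"),   # feedback into the adapter's side input
    ("buffer", "throttler"),          # signal that releases the next frame
]

safe_edges = find_cut_edges(flow)
```

For this assumed layout the only cut edge is the connection between the camera and the throttler, because the throttling signal and the feedback path put every other edge on a cycle.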

Algorithm components have start/stop functionality. When stopped, frames will not be processed but will still be output from the component, just without results.
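The stopped behaviour can be sketched with a toy component. This Python model is illustrative only: the `ToyTracker` class, its `process` method, and the string results are assumptions, chosen to show that frames keep flowing while results disappear.

```python
class ToyTracker:
    """Toy algorithm component with start/stop semantics as described above."""

    def __init__(self):
        self._running = False

    def start(self):
        self._running = True

    def stop(self):
        self._running = False

    def process(self, frame):
        """Always pass the frame on; attach results only while running."""
        results = ["pose-for-" + frame] if self._running else []
        return (frame, results)


tracker = ToyTracker()
tracker.start()
while_running = tracker.process("frame-0")

tracker.stop()
while_stopped = tracker.process("frame-1")
```

Because the stopped component still emits a frame, nodes further down the flow keep receiving input and rendering does not stall.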

Typical usage

The following is the usage of a single ImageTracker, which can be used to recognize and track non-repeating planar targets.


The following is the usage of multiple ImageTracker, which can be used to recognize and track repeating planar targets.


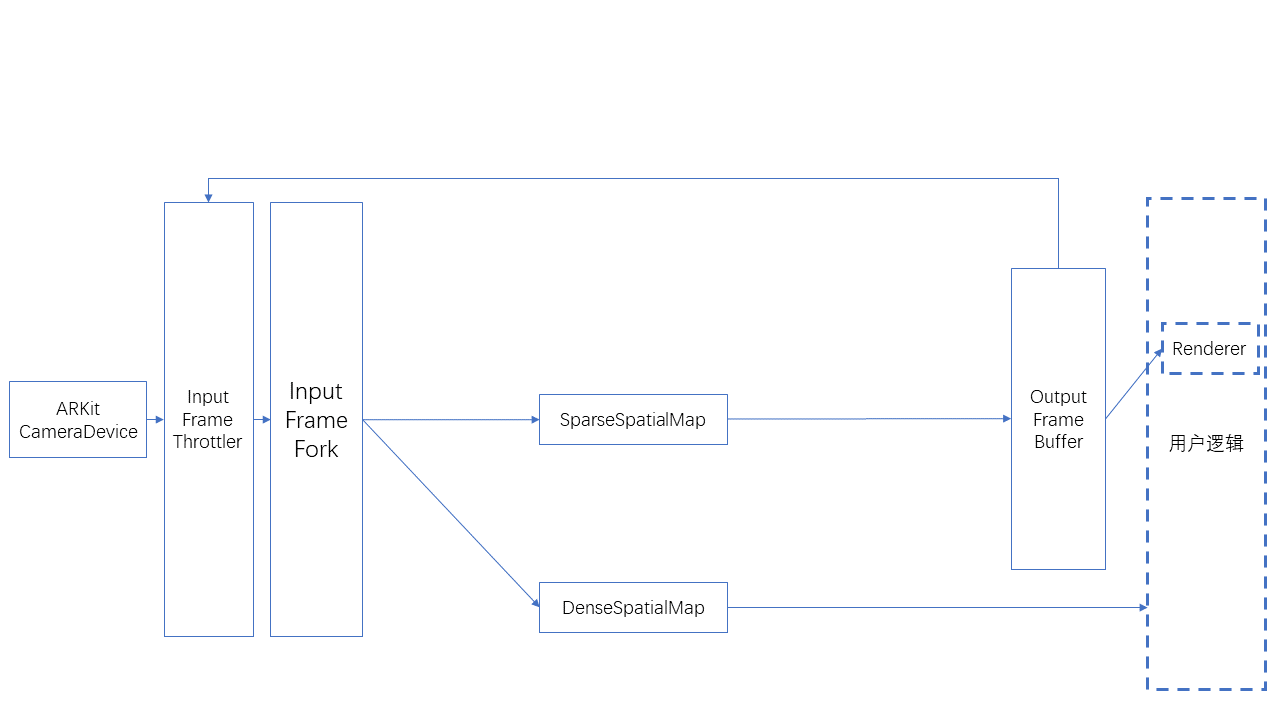

The following is the usage of SparseSpatialMap, which can be used to implement sparse spatial map construction, localization, and tracking.

The following is the usage of SparseSpatialMap and DenseSpatialMap together, which can be used to implement sparse spatial map construction, localization, tracking, and dense spatial map generation.