Make Head-Mounted Display Support EasyAR

This article explains how to use the head-mounted display (HMD) extension package template of the EasyAR Sense Unity Plugin to develop an EasyAR extension package that supports head-mounted display devices.

Before you start

Before diving into development, you need to understand how to use the EasyAR Sense Unity Plugin.

- Quickstart

- Run the AR Session sample, the ImageTracking_Targets sample (image tracking), and the SpatialMap_Dense_BallGame sample (dense spatial mapping). These features behave similarly on mobile devices and headsets.

Developing a headset extension relies on some fundamental EasyAR functionality, so familiarize yourself with the following:

- Understand AR Session

- Learn about frame data sources and external frame data sources

Additionally, you should be familiar with how to develop a Unity package.

Prepare the device for AR/MR

Prepare the motion-tracking/VIO system

Ensure that the device's tracking error is under control. Some EasyAR features, such as Mega, can reduce the device's accumulated error to some extent, but large local errors can still make EasyAR's algorithms unstable. As a general guideline, VIO drift should stay within 1‰ (0.1%).
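The 1‰ guideline can be checked with simple arithmetic. The source does not define how drift is measured, so the sketch below assumes a common convention: end-point position error divided by total distance traveled. The function names and the sample trajectory are hypothetical and only illustrate the calculation.

```python
import math

def path_length(positions):
    """Total distance traveled along a sequence of (x, y, z) positions, in meters."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))

def drift_per_mille(positions, true_end):
    """Drift as end-point error divided by path length, in per mille.

    Assumption: this ratio is how drift is evaluated; the article does not say.
    """
    length = path_length(positions)
    if length == 0:
        raise ValueError("trajectory has zero length")
    error = math.dist(positions[-1], true_end)
    return 1000.0 * error / length

# Hypothetical closed-loop walk around a 10 m x 10 m square.
# The device really returns to the start, but the VIO reports 3 cm of offset.
reported = [(0, 0, 0), (10, 0, 0), (10, 0, 10), (0, 0, 10), (0.03, 0, 0)]
drift = drift_per_mille(reported, true_end=(0, 0, 0))
print(f"drift: {drift:.2f} per mille, within guideline: {drift <= 1.0}")
```

With this convention, 3 cm of error over roughly 40 m of travel is about 0.75‰, which stays inside the guideline; 5 cm over the same path would not.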

Prepare the display system

Ensure that virtual content aligns with the real world. When a virtual object with the same size and outline as a real-world object is placed in the virtual world, and its transformation relative to the virtual camera matches that of the real object relative to the device, the virtual object should overlay the real object seamlessly, and moving the device or turning your head should not break this alignment. Vision Pro is a good reference for the expected effect.

Prepare the device SDK

Ensure that the device SDK offers an API that provides the external input frame data. This data should be generated at two, and only two, points in time in the system, and the data must not be misaligned.

Using the HMD extension package template

Import the EasyAR Sense Unity Plugin (package com.easyar.sense) through Unity's Package Manager window by installing it from a local tarball file. Then extract the HMD extension template (package com.easyar.sense.ext.hmdtemplate) into the Packages directory of your Unity project and rename its Samples~ folder to Samples.

At this point, you should see the following directory structure:

    .
    ├── Assets
    └── Packages
        └── com.easyar.sense.ext.hmdtemplate
            ├── CHANGELOG.md
            ├── Documentation~
            ├── Editor
            ├── LICENSE.md
            ├── package.json
            ├── Runtime
            └── Samples
                └── Combination_BasedOn_HMD
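If you prefer to script the rename and check the resulting layout, a minimal sketch could look like the following. It assumes the template has already been extracted into Packages; the helper names and the list of checked entries are illustrative and not part of EasyAR or Unity.

```python
from pathlib import Path

PACKAGE_NAME = "com.easyar.sense.ext.hmdtemplate"
# Entries from the directory listing that this sketch chooses to verify.
EXPECTED_ENTRIES = ["Editor", "Runtime", "Samples", "package.json"]

def enable_samples(project_root):
    """Rename Samples~ to Samples inside the extracted template, if needed."""
    package_dir = Path(project_root) / "Packages" / PACKAGE_NAME
    hidden = package_dir / "Samples~"
    visible = package_dir / "Samples"
    if hidden.is_dir() and not visible.exists():
        hidden.rename(visible)
    return package_dir

def missing_entries(package_dir):
    """Return the expected entries that are absent from the package folder."""
    return [name for name in EXPECTED_ENTRIES
            if not (Path(package_dir) / name).exists()]
```

Calling `missing_entries(enable_samples("path/to/YourUnityProject"))` (the path is a placeholder) returns an empty list when the layout matches the listing above.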

Tip

If needed, you can use any method allowed by Unity to import the EasyAR Sense Unity Plugin and place the HMD extension template.

If you choose not to use the template, you can also refer to Unity's guide for creating custom packages to create a new package.

If the device SDK is not organized as a Unity package, extract the HMD extension template into the project's Assets folder instead, then delete package.json and every file with the .asmdef suffix from the extracted files. Note that in this setup, users who use both the device SDK and EasyAR will not get proper version dependencies.
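For the Assets-folder case, the cleanup can be scripted. The sketch below assumes the template has already been extracted to a folder of your choice under Assets; the function name is hypothetical, and the script only removes package.json at the top level and .asmdef files at any depth.

```python
from pathlib import Path

def strip_package_metadata(template_dir):
    """Delete package.json and every *.asmdef file below template_dir.

    Returns the list of removed paths so the result can be reviewed.
    """
    root = Path(template_dir)
    targets = [root / "package.json"] + sorted(root.rglob("*.asmdef"))
    removed = []
    for path in targets:
        if path.is_file():
            path.unlink()
            removed.append(path)
    return removed
```

Running it a second time on the same folder returns an empty list, so it is safe to repeat.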

Complete the runtime input extension

Following the guide "Create image and device motion data input extension", modify Runtime/HMDTemplateFrameSource.cs to complete the input extension for the head-mounted display. This is the main development task of the extension package.

Complete the editor menu

Modify the "HMD Template" string in the MenuItems class to represent the device name. If other custom editor features are needed, additional scripts can also be added.

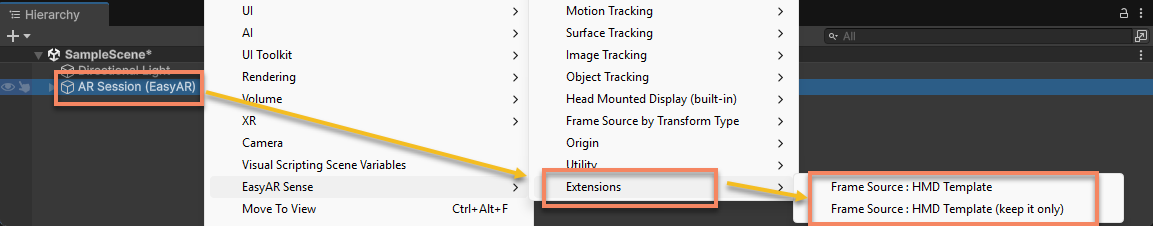

When developers right-click on AR Session (EasyAR) in the Hierarchy view, the following menu items will appear:

- EasyAR Sense>Extensions>Frame source : [Device Name]: Adds a frame source for this device to the current session.

- EasyAR Sense>Extensions>Frame source : [Device Name (keep it only)]: Adds a frame source for this device to the current session and keeps it as the only frame source.

Complete the application example

The example is located in Samples/Combination_BasedOn_HMD. To keep things simple, the example template contains no code; all AR functionality is achieved through scene content and configuration.

Add content that supports device operation to the scene.

Tip

If needed, you can also do the opposite: use a scene that can run on the device, then add EasyAR components and other objects from the sample scene to the scene.

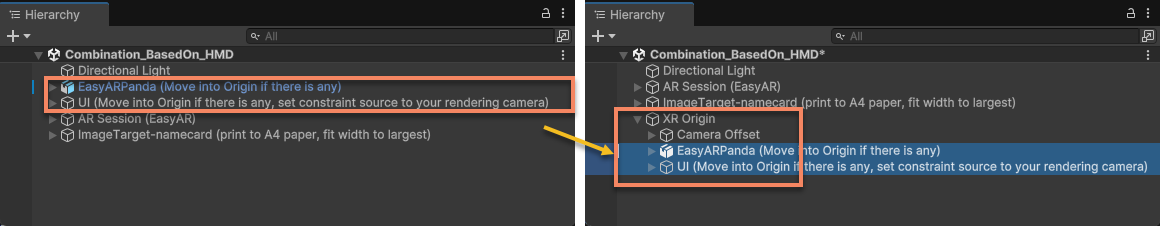

Modify the objects designed to be placed under the session origin.

If a session origin is defined in the scene, move EasyARPanda and UI under the origin node.

EasyARPanda provides a reference for device motion tracking behavior, which helps identify the cause when tracking is unstable.

The text in parentheses in these object names is a hint for extension developers and can be deleted:

- (Move into Origin if there is any)

- (Move into Origin if there is any, set constraint source to your rendering camera)

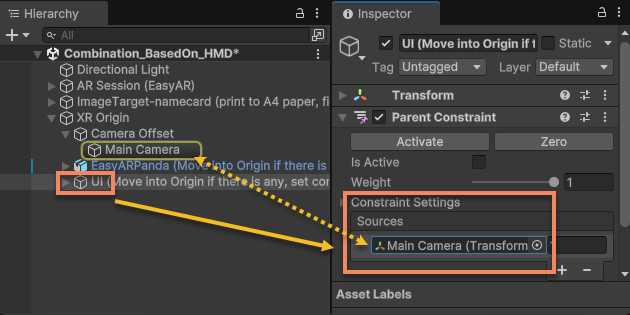

Configure HUD button behavior.

Set the constraint source of UI to the virtual camera to ensure the HUD buttons work as expected.

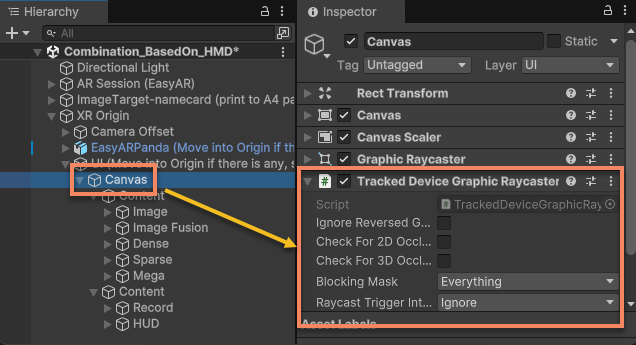

Configure

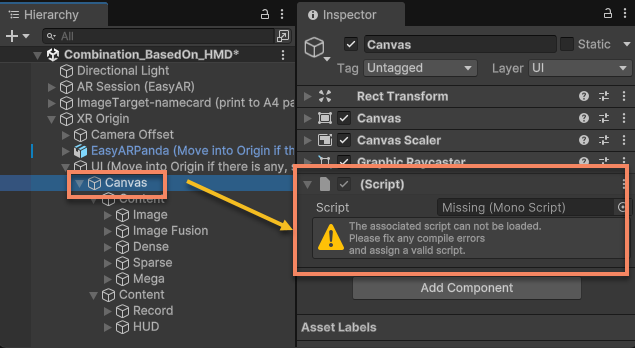

Canvasraycast functionality.Modify the

Canvasunder theUInode to ensure raycast works, so that all UI buttons and switches function as expected.The template has pre-added XR Interaction Toolkit's Tracked Device Graphic Raycaster under the

Canvasnode, which will be visible after importing the corresponding package.

If XR Interaction Toolkit is not used when running on the device, you will see a missing script prompt similar to the one below. You can delete it and add the raycaster component required by the device.

Next steps

- Before going further with the extension package, first carry out the bring-up of the input extension

- Once everything is completed, you can prepare to publish the extension package