Image cloud recognition WeChat Mini Program sample walkthrough

This article walks through the sample code in detail so that you can understand it and build your own application on top of it.

For sample download and configuration instructions, please refer to Quick start.

Recognition target settings

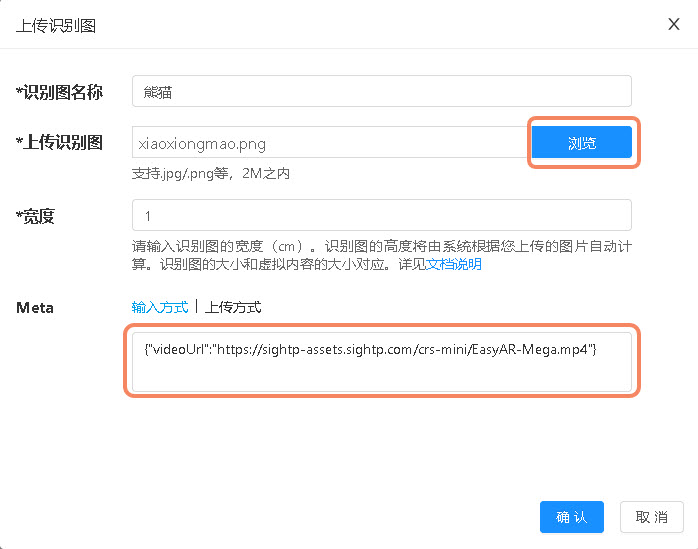

In cloud recognition management, upload a recognition image.

Recognition image name: Give the recognition target a name, such as "Panda".

Upload recognition image: Select and upload an image. The image used in this example is:

Width: The width of the recognition image in cm. The system calculates the height automatically from the image you upload. The size of the recognition image determines the size of the virtual content; it is not used in this example.

Meta: Additional information, generally used to store AR content information. The content used in this example:

{"modelUrl": "https://sightp-assets.sightp.com/crs-mini/xiaoxiongmao.glb", "scale": 0.4}

Retrieving the recognition target

When the cloud recognition API is called and a target is recognized, the target information is returned in the following structure:

    {
      "statusCode": 0,
      "result": {
        "target": {
          "targetId": "375a4c2e********915ebc93c400",
          "meta": "eyJtb2RlbFVybCI6ICJhc3NldC9tb2RlbC90cmV4X3YzLmZieCIsICJzY2FsZSI6IDAuMDJ9",
          "name": "demo",
          "trackingImage": "/9j/4AAQSkZJRgABAQ************/9k=",
          "modified": 1746609056804
        }
      },
      "date": "2026-01-05T05:50:36.484Z",
      "timestamp": 1767592236484
    }

Tip

For complete field information, refer to the API reference.

Base64-decode the meta field to recover the original meta information.

    // data is the response returned by the cloud recognition API
    const meta = data.result.target.meta;
    const modelInfo = JSON.parse(atob(meta));

Note

The atob method is not available in WeChat Mini Programs and must be implemented manually.

An implementation is provided in the libs/atob.js file in the sample directory.
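
To illustrate what a manual implementation can look like, here is a minimal sketch of a base64 decoder. The name decodeBase64 is hypothetical, and this is not the code shipped in libs/atob.js. Like atob, it returns a binary string, so meta containing non-ASCII characters would need an additional UTF-8 decoding step.

```javascript
// Hypothetical helper for illustration; not the sample's libs/atob.js.
const BASE64_CHARS =
  'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/';

function decodeBase64(input) {
  // Drop whitespace and trailing "=" padding before decoding.
  const clean = String(input).replace(/\s/g, '').replace(/=+$/, '');
  let output = '';
  let buffer = 0; // bits read so far but not yet emitted
  let bits = 0;   // number of valid bits in buffer
  for (const ch of clean) {
    const value = BASE64_CHARS.indexOf(ch);
    if (value === -1) {
      throw new Error('Invalid base64 character: ' + ch);
    }
    buffer = (buffer << 6) | value;
    bits += 6;
    if (bits >= 8) {
      bits -= 8;
      output += String.fromCharCode((buffer >> bits) & 0xff);
      buffer &= (1 << bits) - 1; // keep only the bits not yet emitted
    }
  }
  return output;
}

// The meta value from the response structure shown earlier.
const meta =
  'eyJtb2RlbFVybCI6ICJhc3NldC9tb2RlbC90cmV4X3YzLmZieCIsICJzY2FsZSI6IDAuMDJ9';
const modelInfo = JSON.parse(decodeBase64(meta));
console.log(modelInfo.modelUrl, modelInfo.scale);
```

Throwing on an unknown character, rather than skipping it, makes a corrupted meta value fail visibly at the JSON.parse step's caller instead of producing garbage.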

Main code description

components/easyar-cloud/easyar-cloud.js

Methods such as using wx.createCameraContext to open the camera, capture images, and access cloud recognition.

components/easyar-ar/easyar-ar.js

Methods such as using xr-frame to open the camera, capture images, access cloud recognition, play videos, and render models.

components/libs/crs-client.js

Methods such as token generation and cloud recognition access.

Warning

Do not use the API Key and API Secret directly in client-side applications (web pages, WeChat Mini Programs, and so on).

The sample does so for demonstration purposes only. In production environments, generate the token on the server side.
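
As a rough sketch of the client side of that arrangement, the helper below fetches a token from your own server and caches it. Everything specific here is an assumption made for illustration: the name createTokenProvider, the endpoint URL, and the { token, expiresIn } response shape are not part of the sample or of the cloud recognition API. The request function is passed in so that the same code works with wx.request.

```javascript
// Hypothetical helper. The endpoint and the { token, expiresIn } response
// shape are assumptions; adapt them to whatever your server returns.
function createTokenProvider(request, tokenUrl, now = Date.now) {
  let cached = null; // { token, expiresAt } once a token has been fetched

  return function queryToken() {
    // Reuse the cached token until 10 seconds before it expires.
    if (cached && cached.expiresAt - now() > 10 * 1000) {
      return Promise.resolve(cached.token);
    }
    return new Promise((resolve, reject) => {
      request({
        url: tokenUrl,
        method: 'GET',
        success: res => {
          const body = res.data || {};
          if (!body.token) {
            reject(new Error('Token response did not contain a token'));
            return;
          }
          cached = {
            token: body.token,
            expiresAt: now() + (body.expiresIn || 0) * 1000,
          };
          resolve(body.token);
        },
        fail: reject,
      });
    });
  };
}

// Assumed usage in a Mini Program (placeholder URL):
// const queryToken = createTokenProvider(wx.request, 'https://your-server.example/crs-token');
```

With this split, the API Key and API Secret stay on the server and the Mini Program only ever sees a short-lived token.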

Understanding the code in depth

If you want to go deeper into cloud recognition development, read the sample source code, then try modifying and extending it.

Tip

The following explanation assumes a working knowledge of HTML and JavaScript. If you are not yet comfortable with them, learn the fundamentals first so that the rest of this article is easier to follow.

The XR/3D engine used in WeChat Mini Programs is XR-FRAME. If you are unfamiliar with it, read its documentation first.

The walkthrough below uses rendering a 3D model as the example for explaining the main source code in the sample.

UI and scene handling

This section describes the file components/easyar-ar/easyar-ar.wxml.

XR scene and marker settings:

    <xr-scene ar-system="modes:Marker" id="xr-scene" bind:ready="handleReady" bind:ar-ready="handleARReady" bind:tick="handleTick">
      <xr-node>
        <xr-ar-tracker wx:if="{{markerImg != ''}}" mode="Marker" src="{{markerImg}}" id="arTracker"></xr-ar-tracker>
        <xr-camera id="camera" node-id="camera" position="0.8 2.2 -5" clear-color="0.925 0.925 0.925 1" background="ar" is-ar-camera></xr-camera>
      </xr-node>
      <xr-shadow id="shadow-root"></xr-shadow>
      <xr-node node-id="lights">
        <xr-light type="ambient" color="1 1 1" intensity="2" />
        <xr-light type="directional" rotation="180 0 0" color="1 1 1" intensity="1" />
      </xr-node>
    </xr-scene>

Tip

markerImg is the address of the recognition image, which is returned when cloud recognition identifies the target.
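
The response carries the recognition image as base64 data in trackingImage, while markerImg has to be an address. One possible way to bridge the two, sketched here under assumptions, is to write the data to a local file and use the file path. The helper name saveTrackingImage and the file naming are hypothetical, and the sample's own loadTrackingImage may work differently. The file system object is passed in; in a Mini Program it would be the object returned by wx.getFileSystemManager().

```javascript
// Hypothetical helper for illustration; the sample's loadTrackingImage may differ.
function saveTrackingImage(fs, dir, targetId, base64) {
  // One file per target, so the same target is simply overwritten.
  const filePath = `${dir}/marker-${targetId}.jpg`;
  return new Promise((resolve, reject) => {
    fs.writeFile({
      filePath,
      data: base64.replace(/[\r\n]/g, ''), // remove line breaks inside the base64 text
      encoding: 'base64',
      success: () => resolve(filePath),
      fail: reject,
    });
  });
}

// Assumed usage inside the component:
// saveTrackingImage(wx.getFileSystemManager(), wx.env.USER_DATA_PATH,
//                   target.targetId, target.trackingImage)
//   .then(markerImg => this.setData({ markerImg }));
```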

Business logic

This section explains the main code in the file components/easyar-ar/easyar-ar.js.

    handleTick() {
      // Capture a screenshot and send it to the cloud recognition service
      this.capture()
        .then(base64 => this.crsClient.searchByBase64(base64.split('base64,').pop()))
        .then(res => {
          // Result returned by cloud recognition
          console.info(res);
          // A non-zero statusCode means no target was recognized
          if (res.statusCode !== 0) {
            return;
          }
          const target = res.result.target;
          // Set the marker
          this.loadTrackingImage(target.trackingImage.replace(/[\r\n]/g, ''));
          // Use the meta information to decide whether the content is a model or a video
          try {
            const setting = JSON.parse(atob(target.meta));
            if (setting.modelUrl) {
              this.loadModel(target.targetId, setting);
            } else if (setting.videoUrl) {
              this.loadVideo(target.targetId, setting);
            }
          } catch (e) {
            console.error(e);
          }
        })
        .catch(err => {
          console.error(err);
        });
    },

    capture() {
      // Get the camera image; prefer the asynchronous API when it is available
      const opt = { type: 'jpg', quality: this.properties.config.jpegQuality };
      if (this.scene.share.captureToDataURLAsync) {
        return this.scene.share.captureToDataURLAsync(opt);
      }
      return Promise.resolve(this.scene.share.captureToDataURL(opt));
    },

Tip

For complete code, please refer to the example source file.
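
Because handleTick is bound to the scene's tick event, an application would normally limit how often a frame is actually sent to the service. The excerpt above does not show how, or whether, the sample does this, so the following is only a sketch of one possible approach. The name createThrottledTask and its parameters are hypothetical.

```javascript
// Hypothetical wrapper: a call is skipped while the previous one is still
// pending, or while fewer than minIntervalMs have passed since the last start.
function createThrottledTask(task, minIntervalMs, now = Date.now) {
  let busy = false;
  let lastStart = -Infinity;

  return function run() {
    if (busy || now() - lastStart < minIntervalMs) {
      return Promise.resolve(undefined); // skipped
    }
    busy = true;
    lastStart = now();
    return Promise.resolve()
      .then(task)
      .then(
        result => { busy = false; return result; },
        err => { busy = false; throw err; }
      );
  };
}

// Assumed usage: wrap the capture-and-search step once, then call it per tick.
// const recognize = createThrottledTask(() => captureAndSearch(), 1000);
// handleTick() { recognize(); }
```

Resetting the busy flag in both the success and the failure branch keeps a failed request from blocking all later attempts.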

Cloud recognition processing

This section describes the main method in the file components/libs/crs-client.js.

The method sends the base64 image data to the cloud recognition service API.

    searchByBase64(img) {
      const params = {
        image: img,
        notracking: 'false',
        appId: this.config.crsAppId,
      };
      return this.queryToken().then(token => {
        return new Promise((resolve, reject) => {
          wx.request({
            url: `${this.config.clientEndUrl}/search`,
            method: 'POST',
            data: params,
            header: {
              'Authorization': token,
              'content-type': 'application/json'
            },
            success: res => resolve(res.data),
            fail: err => reject(err),
          });
        });
      });
    }
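
The two ends of this call, preparing the image string and reading the response, can be isolated into small pure functions, which makes them easy to test outside the Mini Program. This is a minimal sketch based only on the response structure shown earlier; the names stripDataUrlPrefix and extractTarget are hypothetical and do not appear in the sample.

```javascript
// Hypothetical helpers for illustration; not part of crs-client.js.

// Remove a data URL prefix such as "data:image/jpeg;base64," if present,
// leaving only the base64 payload expected in the "image" field.
function stripDataUrlPrefix(dataUrl) {
  return dataUrl.split('base64,').pop();
}

// Return the recognized target, or null when the response does not
// describe a successful recognition (statusCode other than 0).
function extractTarget(res) {
  if (!res || res.statusCode !== 0 || !res.result || !res.result.target) {
    return null;
  }
  return res.result.target;
}

const sample = {
  statusCode: 0,
  result: { target: { targetId: 'demo-id', name: 'demo' } },
};
console.log(stripDataUrlPrefix('data:image/jpeg;base64,AAAA'));
console.log(extractTarget(sample).name);
```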

Expected result

- Sample home page

- Model rendering result