Use EasyAR samples on XR headsets or glasses

EasyAR provides unified samples for all headsets. The samples contain no code and are implemented entirely through scene configuration. To learn how each feature is used, refer to the corresponding feature implementations in the Android/iOS mobile samples.

Headset samples are named Combination_BasedOn_*. For example, the Pico sample is Combination_BasedOn_Pico. Each sample demonstrates most EasyAR features in a single scene. The features can be toggled at runtime and used either individually or simultaneously.

Preparation

- Confirm that your headset or glasses are on the EasyAR supported device list.

- Download and import the EasyAR Unity plugin package.

- Download and import the EasyAR Unity XR device extension package.

- Obtain an EasyAR license for XR headsets or glasses. The following license types are available for headsets or glasses:

  - EasyAR Sense 4.x XR License, trial version (trial; self-activated on the EasyAR website)

  - EasyAR Sense 4.x XR License, official version (paid; contact sales for purchase and activation)

  - EasyAR Sense 4.x XR License, enterprise version (for use with the enterprise SDK)

Caution

Only an XR License works on headsets and glasses. A regular license cannot enable EasyAR features on these devices.

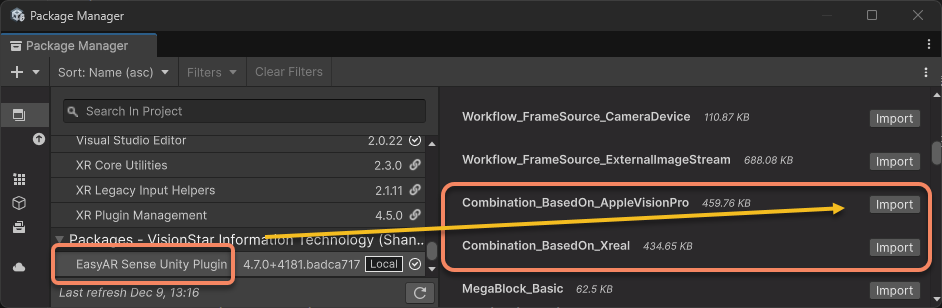

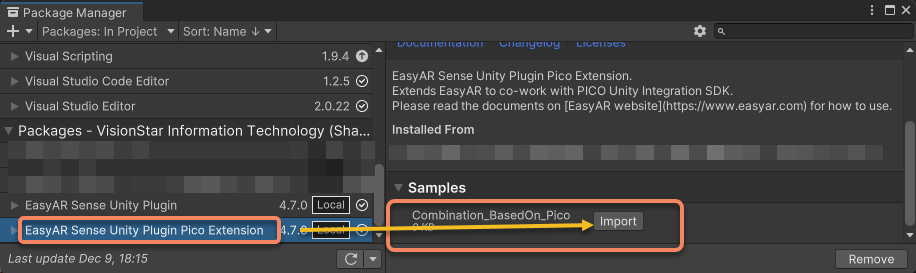

Import official samples

Samples for devices with built-in support are included in the EasyAR Unity plugin package. Import the sample that matches your device.

Samples for devices supported through extensions are distributed with the corresponding headset extension package. You can import them into your project from within Unity. Pico is one example of a device whose sample is imported this way.

Sample packaging and running

First complete the headset project configuration, then package and run the sample as described in the usage instructions.

For headset-specific configuration, strictly follow the official documentation and instructions from the headset vendor. The EasyAR documentation does not cover this configuration.

Then configure the project for your target platform as described in the EasyAR documentation:

- Android: see Android project configuration.

- visionOS: see visionOS project configuration.

- XREAL: in addition to the Android platform settings, complete the XREAL project configuration.

Sample packaging: Package the sample in Unity and deploy it to the device to run it. For details, see Running samples on Unity.

Usage instructions

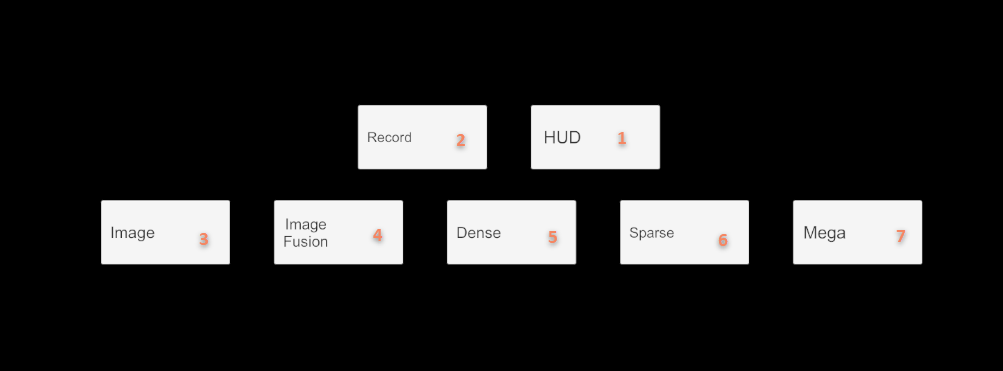

The sample provides the following buttons:

- Button 1, HUD: Toggles the UI display mode. By default, the UI is anchored at a fixed position in the real world. When HUD is enabled, the UI always stays in front of your eyes.

- Button 2, Record: Toggles EIF recording. After starting a recording, you must toggle Record off again to finish it. Otherwise, the recorded EIF file will be unusable.

- Button 3, Image: Toggles image tracking.

- Button 4, Image Fusion: Toggles image tracking with motion fusion.

- Button 5, Dense: Toggles dense spatial mapping.

- Button 6, Sparse: Toggles sparse spatial mapping.

- Button 7, Mega: Toggles Mega.

Function details

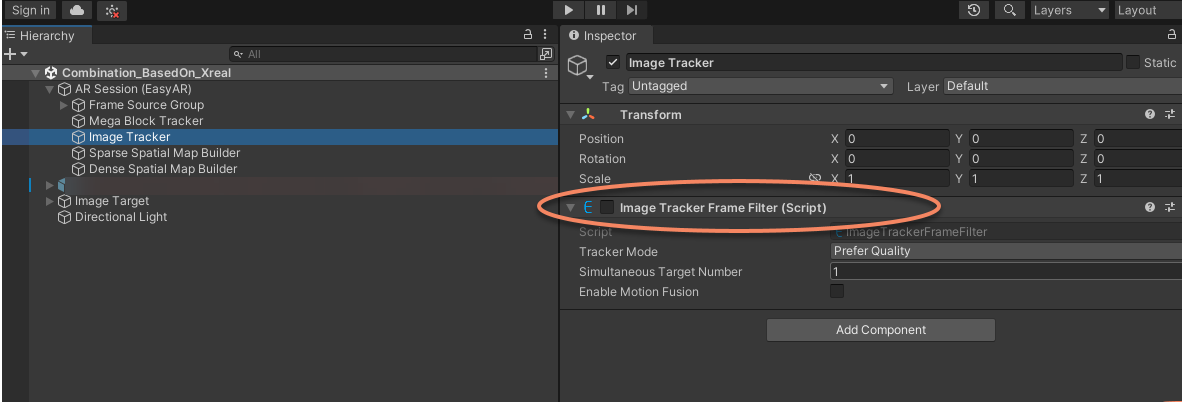

Default function switch

All features are disabled at startup. This is done by deactivating the corresponding scripts in the Unity Editor. The buttons activate and deactivate these scripts at runtime. To choose which features are enabled at startup, activate the corresponding scripts in the editor for the samples you want to run.
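The switching behavior described above can be modeled in a few lines. It has three parts: all features start off unless chosen as defaults, each button flips one feature independently of the others, and a recording counts as usable only once Record is toggled off again. The Python sketch below illustrates that behavior only. The class `FeatureToggles` and the feature names are hypothetical and do not correspond to the sample's actual Unity scripts. In the sample, the same effect comes from activating and deactivating script components.

```python
# Hypothetical model of the sample's toggle buttons; not an EasyAR or Unity API.
FEATURES = ("HUD", "Record", "Image", "ImageFusion", "Dense", "Sparse", "Mega")


class FeatureToggles:
    def __init__(self, defaults=()):
        unknown = set(defaults) - set(FEATURES)
        if unknown:
            raise ValueError(f"unknown features: {sorted(unknown)}")
        # Everything starts off unless listed in `defaults`, mirroring the
        # deactivated scripts in the sample scene.
        self.enabled = set(defaults)
        self.finished_recordings = 0

    def press(self, feature):
        """Flip one feature independently of the others; return its new state."""
        if feature not in FEATURES:
            raise ValueError(f"unknown feature: {feature}")
        if feature in self.enabled:
            self.enabled.remove(feature)
            if feature == "Record":
                # A recording only counts as usable once Record is toggled off.
                self.finished_recordings += 1
            return False
        self.enabled.add(feature)
        return True


toggles = FeatureToggles()      # all features off, as in the sample scene
toggles.press("Image")          # image tracking on
toggles.press("Dense")          # dense mapping on at the same time
toggles.press("Record")         # start recording
toggles.press("Record")         # stop recording; counted as usable
print(sorted(toggles.enabled), toggles.finished_recordings)  # ['Dense', 'Image'] 1
```

Passing a defaults argument, for example `FeatureToggles(defaults=("Mega",))`, corresponds to activating the Mega script in the editor before building.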

Coordinate system origin reference

In the motion tracking samples, a stationary panda model is placed at the origin of the coordinate system so you can check the motion tracking status. The model helps you isolate the source of a problem. For example, if rapid drift appears while running Mega and the panda drifts along with it, the drift comes from the device's own motion tracking (a defect of the device) rather than from Mega. You can move or remove the panda model as needed.

Using embedded image tracking targets

The samples preset the size of the image used for planar image tracking. Print namecard.jpg on A4 paper so that the image is neither stretched nor cropped and fills the page as much as possible (as shown below).

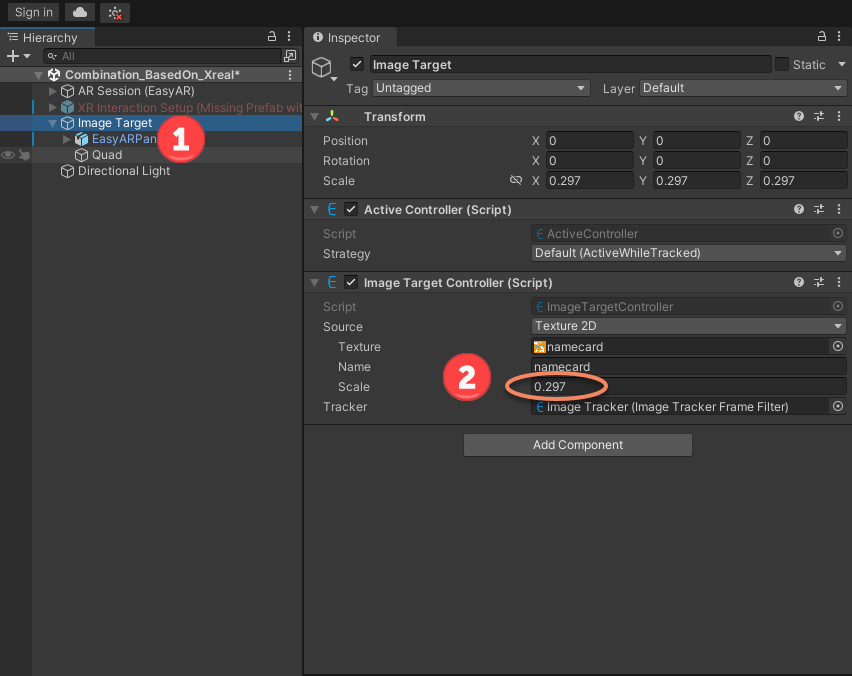

Measure the length of the namecard pattern on the printed paper. Then set the Scale of the Image Target in the Unity scene to the measured physical size, in meters.
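As a sanity check on the measurement, you can estimate the expected printed size from the image's pixel dimensions and the A4 sheet size (210 mm × 297 mm). You can then convert the ruler reading to meters for Scale. The Python sketch below is minimal and rests on several assumptions. The 5 mm printer margin and the 1600 × 1000 pixel image are hypothetical values, so check the actual pixel size of namecard.jpg and your printer's margins. The sketch also assumes that the measured length is the pattern's printed width. Confirm which edge the Image Target's Scale refers to in the EasyAR documentation.

```python
# ISO 216 A4 sheet in millimetres (portrait: width, height).
A4_MM = (210.0, 297.0)


def fit_on_a4(img_w_px, img_h_px, margin_mm=5.0):
    """Largest (width_mm, height_mm) at which an image prints on A4 without
    stretching (one uniform scale factor) or cropping, trying both portrait
    and landscape. margin_mm is a hypothetical unprintable border per side."""
    best = (0.0, 0.0)
    for sheet_w, sheet_h in (A4_MM, A4_MM[::-1]):
        avail_w = sheet_w - 2 * margin_mm
        avail_h = sheet_h - 2 * margin_mm
        # The same mm-per-pixel factor on both axes keeps the aspect ratio.
        mm_per_px = min(avail_w / img_w_px, avail_h / img_h_px)
        size = (img_w_px * mm_per_px, img_h_px * mm_per_px)
        if size[0] * size[1] > best[0] * best[1]:
            best = size
    return best


def scale_from_measurement(measured_mm):
    """Convert a ruler reading in millimetres to meters for the Scale field."""
    if measured_mm <= 0:
        raise ValueError("measurement must be positive")
    return measured_mm / 1000.0


# Hypothetical 1600 x 1000 px image: the landscape placement fills more of the page.
w_mm, h_mm = fit_on_a4(1600, 1000)
print(round(w_mm, 3), round(h_mm, 3))        # 287.0 179.375
print(scale_from_measurement(round(w_mm)))   # 0.287
```

The estimate only tells you roughly what to expect. Printers may rescale silently, so the value entered in Scale should still come from the ruler reading.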

When EasyAR motion fusion is enabled, only images at a fixed position (not moving) can be tracked. When motion fusion is disabled, the image can no longer be tracked once it leaves the field of view.

What you see through the headset may not reflect how large the image appears to the device camera. If tracking fails, try moving the headset camera closer to the image. For practical use, tracking larger images is recommended, for example 5 m × 5 m.
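A simple pinhole-camera estimate shows why moving closer or using a larger image helps. The Python sketch below estimates how much of the camera image a flat target covers when it faces the camera. The 70° horizontal field of view and the 640-pixel image width are hypothetical values, not specifications of any headset or EasyAR detection requirements. The model also ignores lens distortion and viewing angle.

```python
import math


def image_width_fraction(target_width_m, distance_m, camera_hfov_deg):
    """Fraction of the camera image width covered by a flat target that is
    centred in view and faces the camera (ideal pinhole model, no distortion).
    Values above 1.0 mean the target is wider than the camera's view."""
    if target_width_m <= 0 or distance_m <= 0:
        raise ValueError("width and distance must be positive")
    half_extent = target_width_m / (2 * distance_m)          # tan of half the angle the target subtends
    half_fov = math.tan(math.radians(camera_hfov_deg) / 2)   # tan of half the camera FOV
    return half_extent / half_fov


HFOV_DEG = 70.0       # hypothetical camera horizontal field of view
IMAGE_WIDTH_PX = 640  # hypothetical camera image width

# A roughly A4-sized (0.287 m) target at two distances, and a 5 m target at 10 m.
for width_m, dist_m in [(0.287, 2.0), (0.287, 0.8), (5.0, 10.0)]:
    frac = image_width_fraction(width_m, dist_m, HFOV_DEG)
    print(f"{width_m} m target at {dist_m} m: ~{frac * IMAGE_WIDTH_PX:.0f} px wide")
```

In this model, halving the distance doubles the target's width in the camera image. This is why moving the headset closer, or tracking a larger image, can help when detection fails.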

Note

On headsets, whether or not EasyAR motion fusion is enabled, the Scale of the image target must be set to the actual physical size. Otherwise, content will be displayed at the wrong position.

Mega configuration

If you are using EasyAR Mega, see the Mega Unity quickstart.