Introduction to EasyAR Mega

EasyAR Mega is an edge-cloud collaborative spatial computing technology designed to create persistent, high-precision digital twins of large physical spaces (e.g., a city, a campus, or a large shopping mall). With EasyAR Mega, your application can achieve large-scale, high-precision indoor and outdoor localization and virtual-real occlusion, giving users a new kind of spatial interaction experience.

This chapter briefly introduces the core working principles, expected effects, and platform adaptation guidelines of EasyAR Mega from a developer's perspective.

Important

Non-developer users (such as product managers, operators, and testers) can refer directly to the Mega User Guide to learn about Mega services.

Before you begin: Ensure localization service is ready

Before integrating EasyAR Mega into your application, one core prerequisite must be met: the Mega cloud localization service is ready.

- On-site data collection is complete

  - Use designated devices to collect data from the target area

  - Use Mega Toolbox to collect EIF data for effect verification

- Mega Block mapping is complete

- Localization service is enabled and bound to the application

  - Add the Block to the Mega localization library in the Developer Center

  - Obtain a valid App ID and API Key and configure them correctly in your project

Important

If the above steps are not completed, the application will fail to obtain localization results, manifesting as "AR content never triggers." Be sure to verify service availability before development.

Basic principles of Mega localization

Unlike traditional GNSS localization, which relies on satellite signals, EasyAR Mega is based on visual localization. By matching real-time image data captured by the user's device against pre-built high-precision 3D data, it determines the user's 6DoF pose (3D position plus 3D orientation) in the physical world. Based on this pose, the application can render and overlay virtual content at the correct physical location.
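To make the role of the pose concrete, the following minimal Python sketch shows how an application could express a pre-anchored map point in the camera frame once a pose is known. It is illustrative only: the function names, the y-up axis convention, and the camera-to-map pose layout are assumptions made for this example and are not part of the EasyAR API.

```python
import math


def yaw_rotation(deg):
    """3x3 rotation about the vertical (y) axis, as nested lists."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def map_to_camera(point_map, cam_rotation, cam_position):
    """Express a map-frame point in the camera frame.

    cam_rotation (3x3) and cam_position (3 values) together form the
    camera-to-map pose, so the inverse mapping is p_cam = R^T * (p_map - t).
    """
    d = [point_map[i] - cam_position[i] for i in range(3)]
    # Multiplying by R^T: entry i uses column i of R.
    return [sum(cam_rotation[k][i] * d[k] for k in range(3)) for i in range(3)]


# Hypothetical anchor 5 m in front of a camera located at x = 2 in the map.
anchor_in_map = [2.0, 0.0, 5.0]
print(map_to_camera(anchor_in_map, yaw_rotation(0), [2.0, 0.0, 0.0]))
```

A renderer would then project the camera-frame point with the camera intrinsics. A real engine would normally do this through its own transform types rather than hand-written matrix code.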

The workflow is as follows:

Map construction:

- Use professional equipment (such as a panoramic camera) to collect data in the target area.

- Upload the collected data (such as .360 files) through EasyAR's mapping management backend.

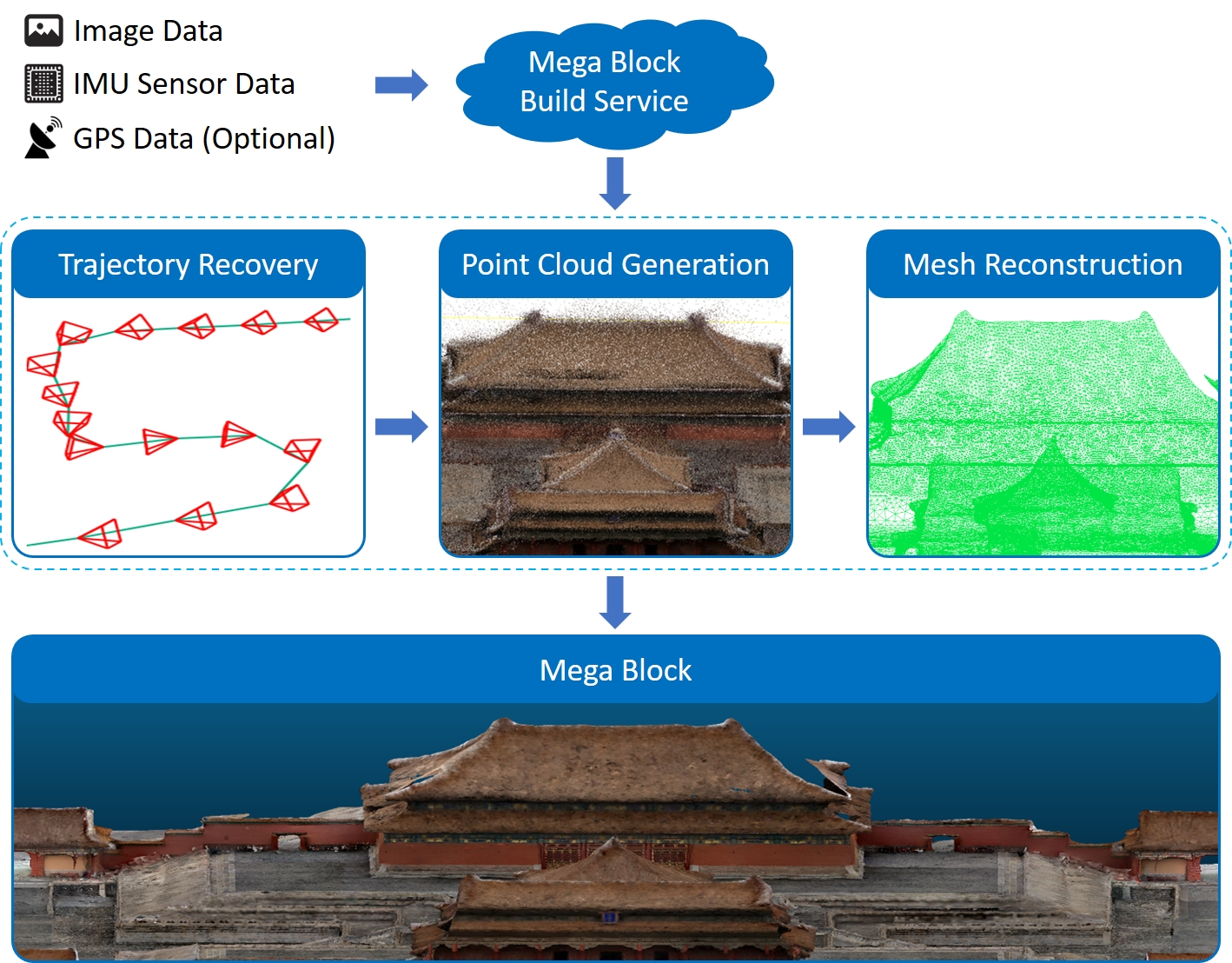

- The cloud processing platform then processes the collected data in three stages. First, AI algorithms extract visual features of the target area from the images. Next, the images are fused with IMU sensor data to recover the motion trajectory during collection (i.e., the camera pose at each moment). The platform then generates a 3D point cloud of the entire scene and constructs a dense, texture-mapped mesh.

- Finally, the mapping system will output a high-precision "Mega Block map" defined by EasyAR, which contains 3D geometric information and visual features. This map is the cornerstone of Mega localization.

Real-time localization:

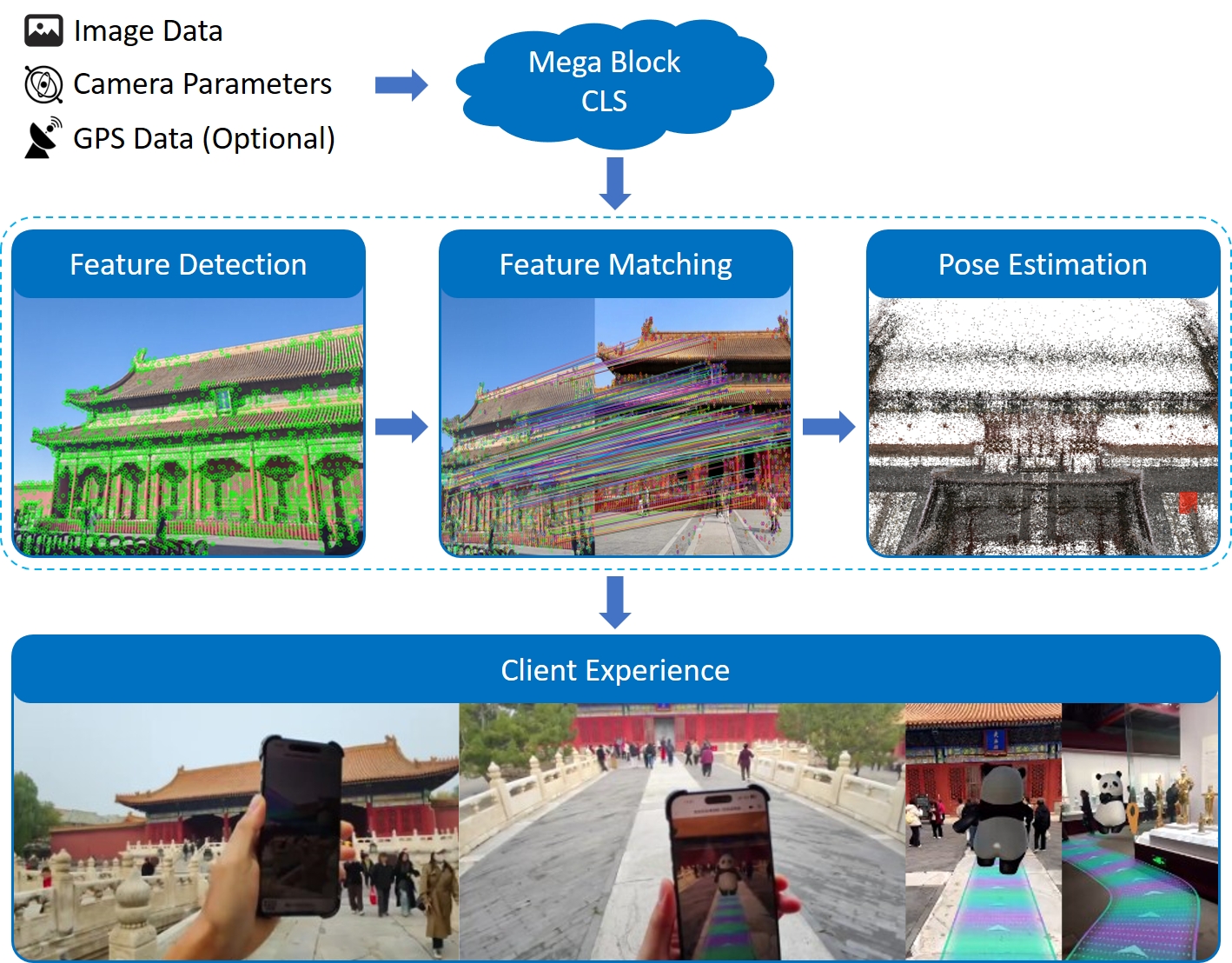

- The user opens the application, and the device camera captures real-time images of the user's field of view, which are sent to the Mega cloud localization service along with camera intrinsics, extrinsics (if any), and auxiliary information (if any, such as GNSS).

- The Mega cloud localization service will extract visual features from the uploaded images and quickly compare and match them with the Mega Block map in the localization library.

- Once a match is successful, the system can calculate the user's exact pose (i.e., position and orientation) in the map with centimeter-level accuracy.

- The Mega cloud localization service then sends the calculated pose to the application, where it is fused with the device's own SLAM system for tracking.

- Finally, the application side will obtain a real-time localized and continuously tracked pose, allowing virtual content to be displayed at pre-anchored positions in the physical world and updated continuously as the user moves.
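The fusion step in the workflow above can be pictured as a frame alignment: a single cloud result fixes the transform between the map and the device's SLAM coordinate system, and later SLAM poses are carried into the map through that transform. The sketch below illustrates this common technique with 4x4 rigid transforms in plain Python. All names are hypothetical, and it should not be read as how the EasyAR SDK implements its fusion internally.

```python
def translation(x, y, z):
    """4x4 rigid transform containing only a translation."""
    return [[1.0, 0.0, 0.0, float(x)],
            [0.0, 1.0, 0.0, float(y)],
            [0.0, 0.0, 1.0, float(z)],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid transform [R | p]: [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [p[0]], r_t[1] + [p[1]], r_t[2] + [p[2]],
            [0.0, 0.0, 0.0, 1.0]]


def align_slam_to_map(map_from_cam, slam_from_cam_at_capture):
    """Map-from-SLAM transform derived from one cloud localization result.

    map_from_cam is the pose returned by the cloud for an uploaded image;
    slam_from_cam_at_capture is the SLAM pose when that image was captured.
    """
    return matmul(map_from_cam, rigid_inverse(slam_from_cam_at_capture))


def tracked_pose_in_map(map_from_slam, slam_from_cam_now):
    """Carry the current SLAM pose into the map frame."""
    return matmul(map_from_slam, slam_from_cam_now)


# Hypothetical values: the cloud places the camera at (10, 0, 5) in the map
# while SLAM reported (1, 0, 0) for the same frame.
map_from_slam = align_slam_to_map(translation(10, 0, 5), translation(1, 0, 0))
# The user then walks 2 m forward according to SLAM.
pose_now = tracked_pose_in_map(map_from_slam, translation(1, 0, 2))
print(pose_now[0][3], pose_now[1][3], pose_now[2][3])
```

Between cloud responses only the SLAM pose changes, which is why the content can keep updating every frame even though localization requests take time to return.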

Effects and expected results

After successfully integrating EasyAR Mega, your application can achieve the following stunning effects:

- Centimeter-level accuracy: Compared to GNSS's errors of several meters or even tens of meters, Mega positioning can deliver sub-meter to centimeter-level accuracy, allowing virtual content to be stably "anchored" to specific locations in the real world.

- Persistent spatial experience: Virtual content can be placed anywhere in the physical world, and all users see consistent content at the same location.

- Real-world occlusion: Through Mega's spatial understanding capabilities, virtual objects can be occluded by real buildings or obstacles, greatly enhancing immersion.

- Operation in GNSS-denied areas: In areas with weak or no GNSS signals—such as indoors, underground parking lots, urban streets surrounded by tall buildings, or forested mountains—Mega still provides stable and reliable positioning services.
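A quick calculation shows why the accuracy difference matters for anchoring. The sketch below estimates how far, in degrees of viewing angle, content appears displaced when the estimated position is off sideways by a given amount. The error values and viewing distance are illustrative assumptions, not measured figures for GNSS or Mega.

```python
import math


def apparent_offset_deg(position_error_m, viewing_distance_m):
    """Angular displacement of content caused by a lateral position error."""
    return math.degrees(math.atan2(position_error_m, viewing_distance_m))


# Assumed scenario: content anchored on a facade 20 m from the user.
gnss_like = apparent_offset_deg(5.0, 20.0)    # 5 m error: roughly 14 degrees
visual_like = apparent_offset_deg(0.05, 20.0)  # 5 cm error: roughly 0.14 degrees
print(f"{gnss_like:.2f} deg vs {visual_like:.2f} deg")
```

Under these assumptions, a meter-level error moves the content visibly off its target, while a centimeter-level error is hard to notice at the same distance.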

The video shows a typical demonstration of EasyAR Mega's capabilities:

- High-precision, persistent spatial positioning allows virtual content to perfectly adhere to building surfaces, presenting stunning dynamic videos and meticulously designed giant 3D posters.

- Real-world occlusion enabled by spatial understanding makes fireworks blooming in the sky and digital effects blend harmoniously with the surroundings without dissonance.

- Empowered by advanced visual algorithms, the entire experience remains robust in complex, crowded environments and operates stably even at night.

Potential limitations

Slow localization

In densely populated areas like large event venues, network latency or concurrent requests may cause significant delays in Mega cloud positioning. Users might need to wait before virtual content appears.

Environmental changes causing errors

Significant physical environment changes (e.g., construction barriers, seasonal vegetation shifts) may reduce positioning accuracy or cause failures. Mega maps require regular updates to adapt to environmental changes.

Drift during sustained experiences

Mega localization is fused with the device’s native SLAM system for continuous tracking, which requires the camera to remain active. Prolonged operation may trigger CPU throttling on the device, leading to stuttering, dropped frames, or scale drift.

Tip

For more details on performance anomalies or failures, refer to the Troubleshooting section.

Extension suggestions

If you encounter issues such as service failures, scene changes, business expansion, or other non-programming-related problems during the integration of EasyAR Mega, please refer to our Mega User Guide.

In this guide, you can find:

- Service creation: Learn how to create Mega services and perform simple troubleshooting.

- Effect optimization: Understand how to preview runtime effects, collect abnormal data, and monitor cold starts.

- Persistent operations: Learn how to handle scene changes, business expansion, and migration/upgrade requirements for long-term operations.

- Business integration: Familiarize yourself with the use of practical business data such as navigation road networks.

- Reference resources: Operation manuals for practical tools like Mega Studio and Mega Toolbox.

Through this chapter, we hope you have gained a clear understanding of the working principles and effects of EasyAR Mega. Next, you can start preparing for your first Mega project!

Platform-specific guides

The integration of EasyAR Mega is closely tied to the platform. Refer to the following guides based on your target platform: